#2: Gestures

Technology has rapidly increased the pace of our day-to-day life. Our most mundane tasks have been analyzed and streamlined for the sake of efficiency, and our methods of communication are no exception. With the help of emojis, lols, and memes we’re able to say more than ever using less. But what gets lost in translation when speed becomes our only measure of success? Typography of Speed is a regular column by Felix Salut that explores past, present, and future examples of language accelerated by technology. More soon… ttyl!

The Upside Down

JOYCE: Blink once for yes and twice for no. Can you do that for me, sweetie?

In the first season of the Netflix series “Stranger Things,” Joyce Byers (Winona Ryder) finds an inventive way of communicating with her son Will, who’s lost to the Upside Down, a bleak, life-threatening shadow world ruled by evil. When she discovers that Will is able to communicate by flickering the Christmas lights via telepathic transmission, she creates her own text input device by painting the alphabet on the wall of her living room. Each letter is connected to a different light bulb, allowing Will to spell out his responses in a kind of optical Morse code.

Stranger Things (2016)

JOYCE: Ok, baby. Talk to me. Talk to me. Where are you?

WILL: (spells through coloured light bulbs)

R-I-G-H-T H-E-R-E.

JOYCE: I don’t know what that means. I need you to tell me what to do. What should I do? How do I get to you? How do I find you? What should I do?

WILL: R-U-N.

I thought of this scene recently while reading an article in the Guardian. It told the story of a group of scientists who have, for the first time, detected the brain signals linked to the thought process of writing letters by hand. Working with a person with paralysis who had sensors implanted in his brain, the team used a computer algorithm to identify the letters he was thinking of as he attempted to write. The system displayed these thought letters in real time as rendered text on a screen. It seems our reality is not so far off from the sci-fi telepathy of “Stranger Things” seen on TV.

In this column, “Typography of Speed: Gestures,” I write about language and speed, and how writing systems can be accelerated through advances in technology. The “brain-to-text” experiment is a recent, fascinating, and potentially slightly scary example of this: What if we could write on technical devices by merely thinking of letters?

Though perhaps not in the obvious sense, writing by hand, keystroke, mouse, or speech are all gestures, and they are successive points in a long history of gesture accelerating written language. As seen today in the ways we swipe and tap to communicate on our phones, gesture and technology together have vast potential to alter the way we write and communicate. In this column, we’ll look back on an abridged history of the gestures that have been central to communication aided by computerized technology.

A patient with limb paralysis imagined writing letters of the alphabet. Sensors implanted in his brain picked up the signals, and algorithms transcribed them onto a computer screen.

Drag, Point, Click

One of the earliest experiments with gesture input took place around 1980 at the MIT Architecture Machine Group’s Media Room, a kind of three-dimensional version of the computer desktop the size of a personal office. The room had a large screen covering an entire wall and a black Eames lounge chair in the center, where one could sit and issue commands via voice, joystick, and a trackpad attached to the arm of the chair.

‘Put That There’ conducted by Richard A. Bolt in 1980

The video above shows the Media Room being used in a project called “Put That There” (1980), a voice and gesture interactive system used to build and modify graphics on a screen. Watching it at the time of release must have felt like seeing a science fiction movie; personal computers had just been introduced for the mass market and voice recognition was only known to most people via HAL 9000, the trippy computer from Stanley Kubrick’s 2001: A Space Odyssey (1968). Today, “Put That There” is a fascinating example of a very early quest for a more immediate input approach via gesture.

But as advanced as it was for the time, the gestures used in “Put That There” were simply taken from the classic actions of a mouse (drag, point, and click) and extended into three-dimensional space. In a talk in 1984, however, MIT Media Lab founder Nicholas Negroponte advocated for a much more direct way of approaching the interface using finger input via touch screens. In this excerpt from his talk, he points out how many separate gestures the mouse requires as an input device:

“When you think for a second of the mouse on Macintosh—and I will not criticize the mouse too much—when you’re typing, first of all, you’ve got to find the mouse. You have to probably stop, you find the mouse, and you’re going to have to wiggle it a little bit to see where the cursor is on the screen. And then when you finally see where it is, then you’ve got to move it to get the cursor over there, and then—‘bang’—you’ve got to hit a button or do whatever. That’s four separate steps, versus typing and touching and typing and touching, just doing it all in one motion, or one-and-a-half, depending on how you want to count. (…) What are other advantages? Well, one advantage is that you don’t have to pick them up. And, people don’t realize how important that is, not having to pick up your fingers to use them.”

Of course, Negroponte’s insistence that touch screens would make communication quicker and easier foreshadows the ways we all now use our phones, but his prediction would not come to pass until many years later.

HAL 9000 from 2001: A Space Odyssey (1968)

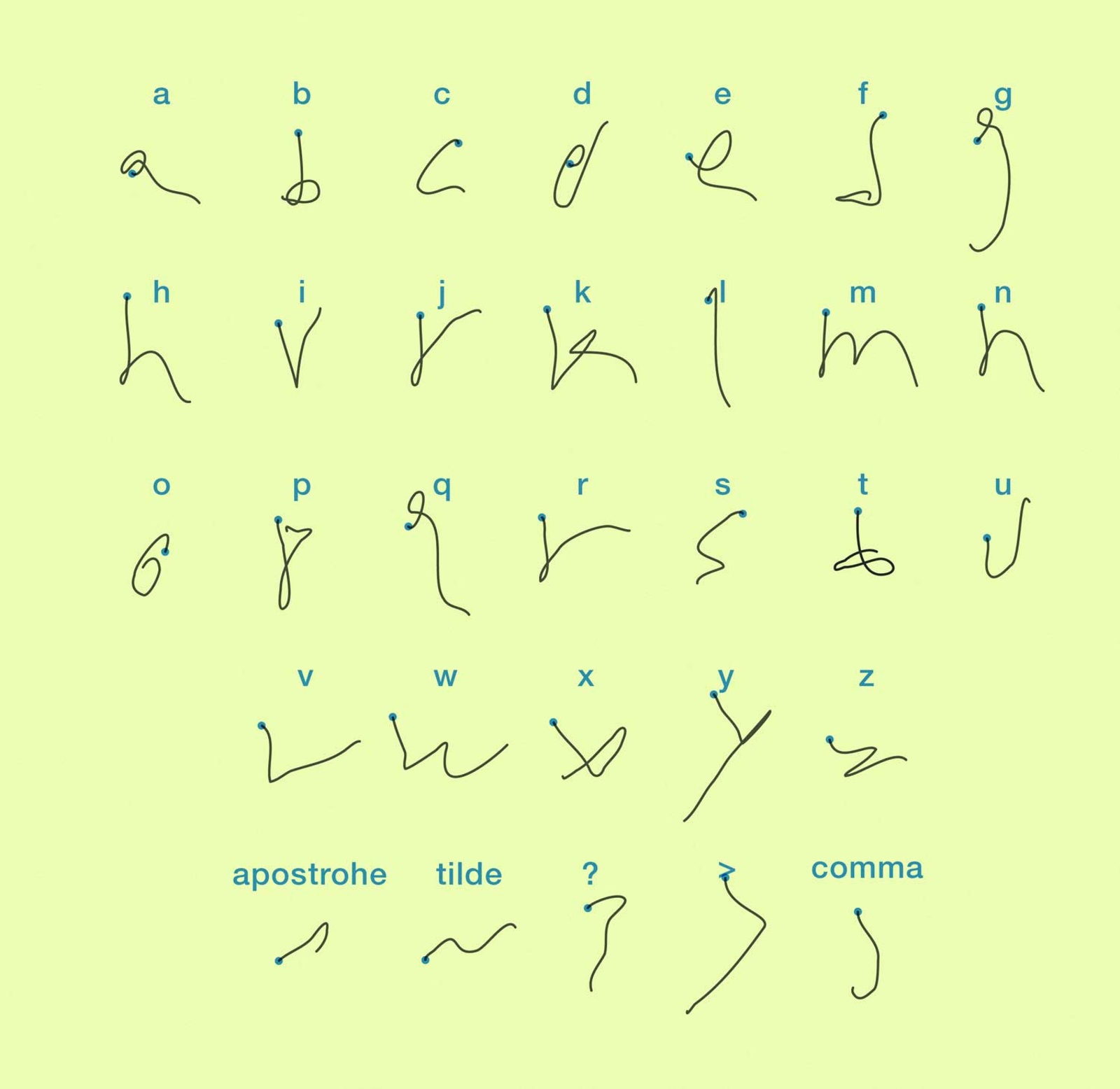

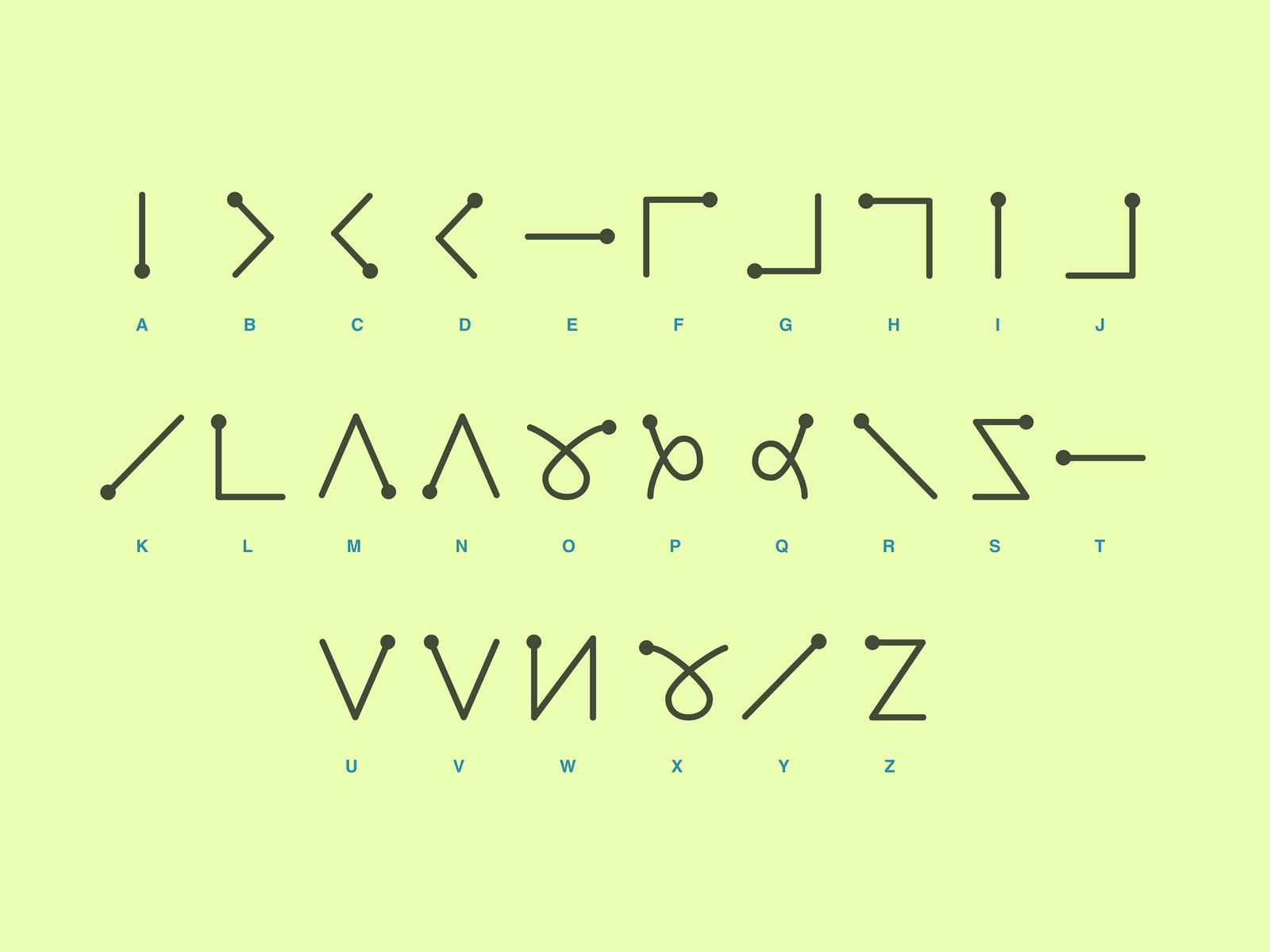

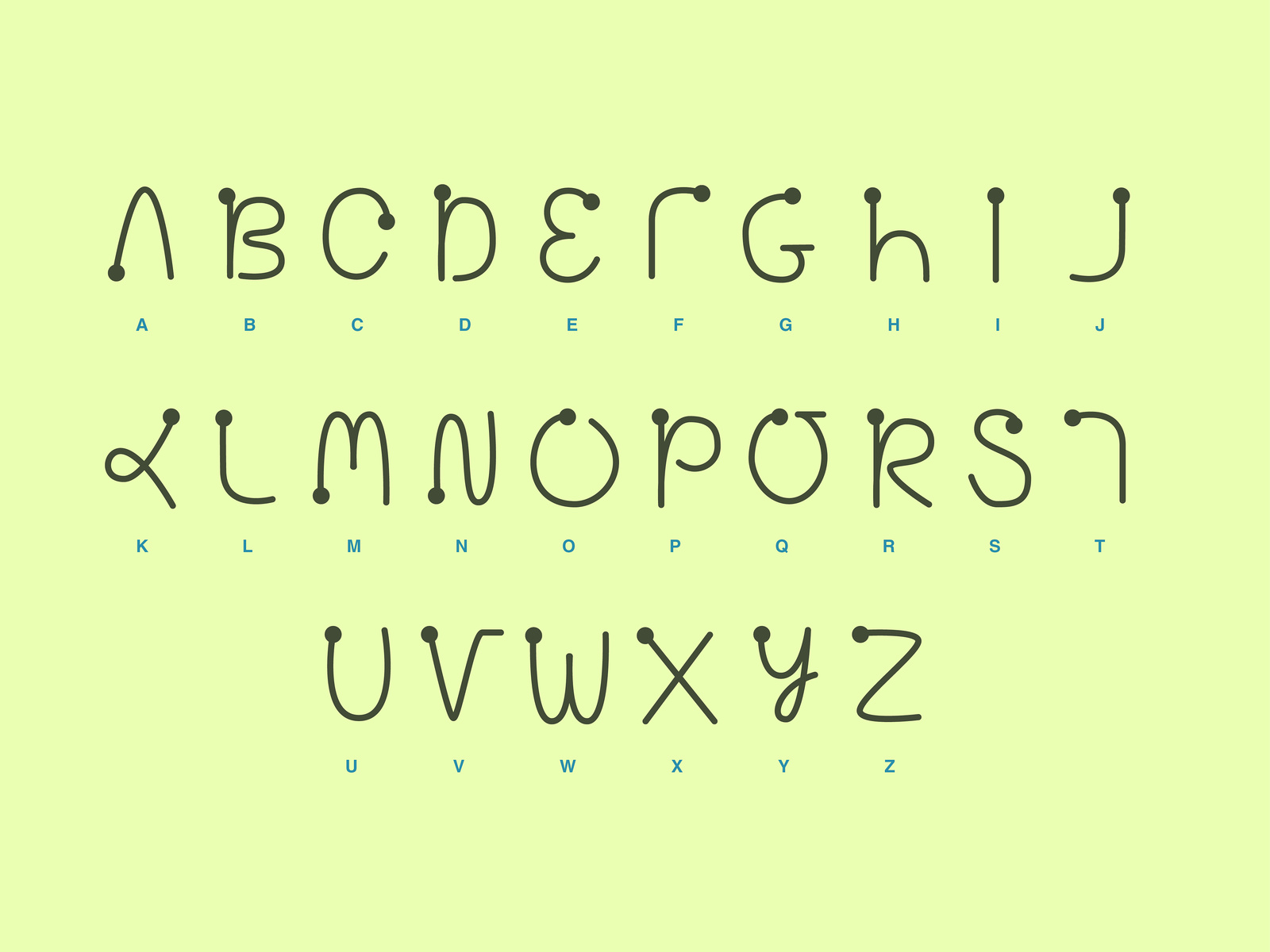

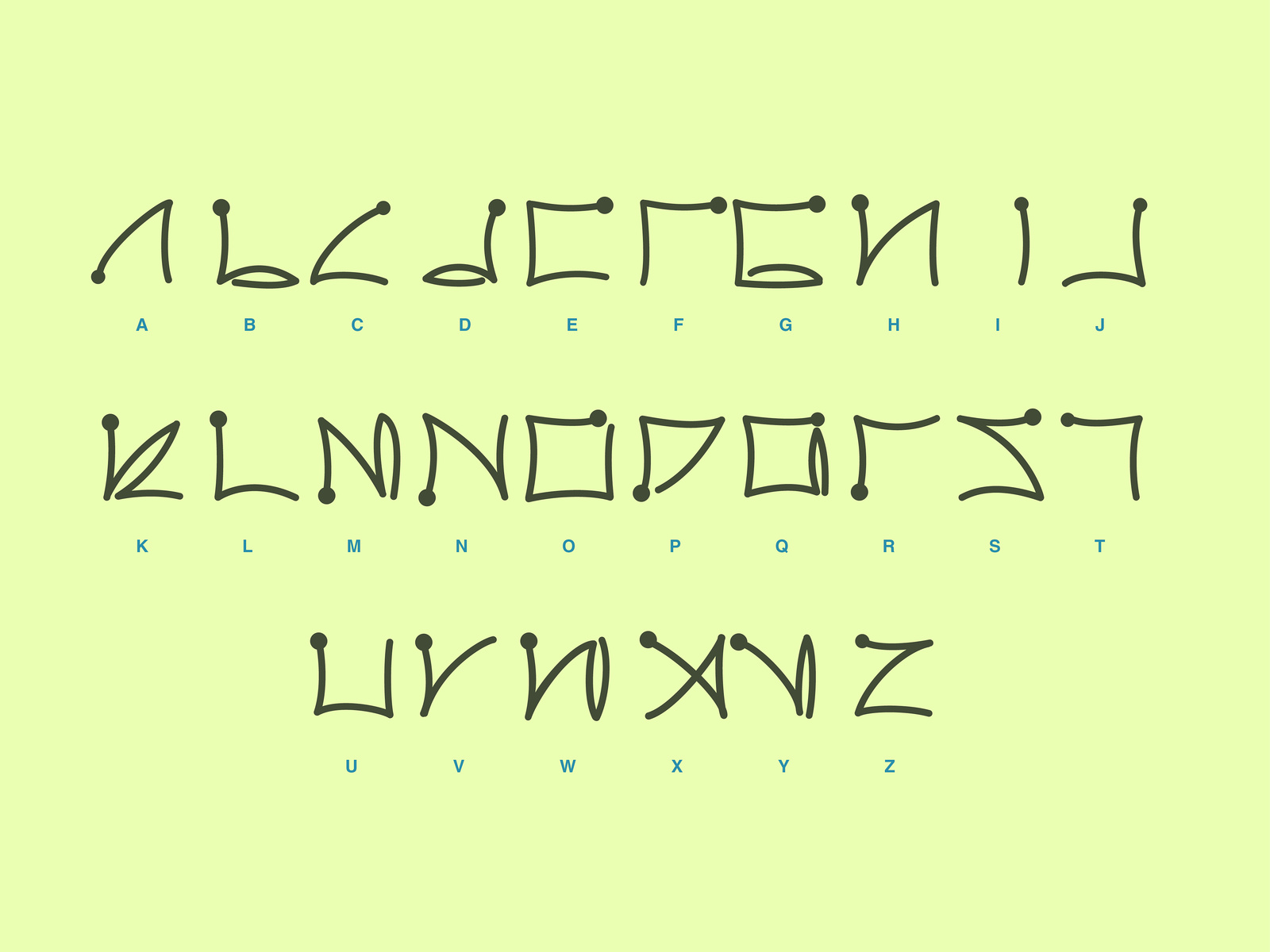

Casio DB-1000 Input Alphabet

Unistroke Alphabet

Graffiti Alphabet

EdgeWrite Alphabet

Touch, Stylus, Slide-to-Text

In 1985, a year after Negroponte’s talk and the same year that the movie Back to the Future came out, Casio released the world’s first smart watch. The Casio DB-1000 incorporated technology that felt years ahead of its time: a 50-name databank, an eight-digit calculator, five alarm clocks (including a feature that allowed for setting them for individual days), a chronograph, and the usual day and date functions. But most special of all was the touchscreen, which didn’t just show the numbers or letters for you to tap. Instead, you drew the characters directly on the screen with a fingertip. Casio even developed its own input alphabet. Yet as prescient as it was, the watch was only sold for three months before disappearing from the market.

It was nearly 10 years before two other handwriting input systems, this time also incorporating the stylus, emerged: Graffiti and Unistrokes. Whereas Graffiti, which was developed by Palm Inc. for the Palm Pilot, is based on the Latin alphabet, Unistrokes, developed by Xerox, is an abstraction of letterforms whose gestures bear little resemblance to Roman letters. In Unistrokes, each letter is assigned a short stroke, with frequent letters (e.g., E, A, T, I, R) associated with a straight line. Both systems share the idea of a single-stroke gesture alphabet for more efficient input.

In the quest for an even more efficient way to input text, other so-called “continuous text entry systems” were invented shortly after Graffiti and Unistrokes. Systems like Quikwrite, Shapewriting, Swype, and Slide-to-Text all share the idea that the user enters words by sliding a finger or stylus across a virtual keyboard, lifting up only between words. This function has been developed in combination with predictive text and is standard on almost all smartphones today.

Pinch, Zoom, Tap, Double Tap, Swipe, Slide, Scroll

In his legendary presentation in 2007, Steve Jobs introduced Apple’s first iPhone with the following words: “Every once in a while a revolutionary product comes along that changes everything.” Though it seemed like typical marketing-speak at the time, that statement has proven to be true. By removing physical keyboards from cell phones and introducing a single large touch screen, Apple and the iPhone introduced a bevy of shortcut gestures that today form the basic vocabulary of digital interaction. Pinch, zoom, tap, double tap, swipe, slide, and scroll have all contributed to the increased speed and efficiency of communicating through technical devices.

There is a fine line between gestures that serve direct text input, such as the handwriting and shapewriting examples mentioned above, and gestures that give birth to a totally new gestural vocabulary. Gestures in the first category try to mimic actions we know from other media, while those in the second can be seen as total game changers.

Casio DB-1000

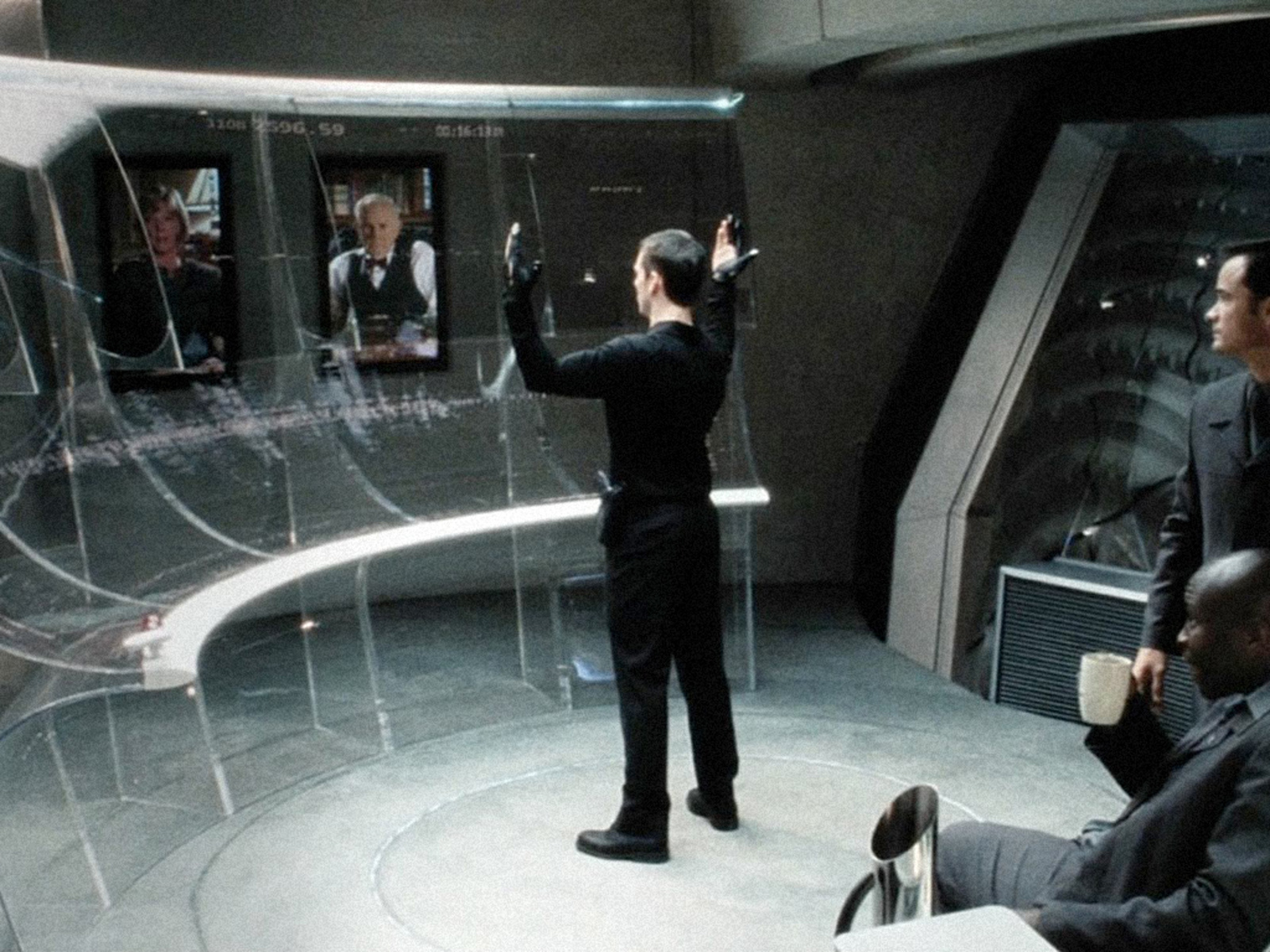

g‑speak SOE (spatial operating environment) developed over three decades by MIT Media Lab

Conducting an Invisible Orchestra

Prior to the making of the sci-fi film Minority Report, set in 2054, Steven Spielberg gathered a group of specialists for a three-day think tank dedicated to dreaming up and thinking through the technology that creates the film’s universe. This resulted in the movie’s signature piece of technology: a gesture interface with which Tom Cruise interacts with the PreCrime system central to the film. According to John Underkoffler, a technical adviser for the film, Spielberg wanted using the interface to look like conducting an orchestra. Underkoffler took that idea and developed it, elaborating on work done by himself and others at MIT, where he was a researcher at the time.

Gesture interface from Minority Report (2002)

Over the past few years, gesture has become increasingly important for fast data input. With hand gesture recognition vastly improved, gesture now also plays an important role in 3D and virtual space, and not only on touch screens. Google, for example, is researching how to register gestures directly via radar sensors. As Ivan Poupyrev from the Google Advanced Technology and Projects Group describes it:

“The hand is the ultimate input device. It’s extremely precise, it’s extremely fast, and it’s very natural for us to use it. Capturing the possibilities of the human hand was one of my passions. How could we take this incredible capability, the finesse of human actions, and finesse of using our hands, but apply it to the virtual world? We use radio frequency spectrum, or radars, to track the human hand. Radars have been used for many different things, to track cars, big objects, satellites, planes. We use them to track micro motions, twitches of the human hands, and then use that to interact with wearables and Internet of Things and other computer devices.”

With this technology, finger movements are performed in the air: you can push a virtual button, move a virtual slider, or turn a virtual knob, without touching anything at all. But Google’s radar research does not stop with exploring hand gestures. It also experiments with the interpretation of other nonverbal interactions, such as our body movements. As Google puts it:

“We are inspired by how people interact with one another. As humans, we understand each other intuitively—without saying a single word. We pick up on social cues, subtle gestures that we innately understand and react to. What if computers understood us this way?”

If this research becomes reality, and if the brain-to-text technology becomes more widespread, the gesture of the future will become almost invisible—as if guided by a mysterious force.

Radar-powered gesture control on Google’s Pixel 4